```python
!pip install kaggle >> /dev/null
```

So far

We’ve learned how to:

- Load some data in
- Create a basic DataBlock
- Create a vision_learner
- Train a model

Let’s now apply this to a dataset that doesn’t ship with fastai: the Clothing dataset.

Let’s download the dataset

To download the dataset, you will need the kaggle package installed and a kaggle.json API token stored in ~/.kaggle. You can get your token by going to Your Profile -> Account -> Create New API Token.

If you don’t have a Kaggle account, you can make one for free here
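If your browser saved kaggle.json somewhere else (a Downloads folder, say), a small helper like the one below can copy it into place. This is a sketch: install_kaggle_token is a hypothetical name, and the source path you pass it is an assumption about where your token landed. The ~/.kaggle/kaggle.json location comes from the text above; restricting the file to owner-only permissions (the same as chmod 600) is what the Kaggle CLI suggests when it warns that your key is readable by other users.

```python
import shutil
import stat
from pathlib import Path


def install_kaggle_token(src, kaggle_dir=None):
    """Copy a downloaded kaggle.json into ~/.kaggle and make it owner-only.

    Hypothetical helper: `src` is wherever your browser saved the token.
    """
    kaggle_dir = Path(kaggle_dir) if kaggle_dir is not None else Path.home() / ".kaggle"
    kaggle_dir.mkdir(parents=True, exist_ok=True)
    dest = kaggle_dir / "kaggle.json"
    shutil.copy(src, dest)
    # Equivalent to `chmod 600`: read/write for the owner, nothing for anyone else
    dest.chmod(stat.S_IRUSR | stat.S_IWUSR)
    return dest
```

For example, install_kaggle_token(Path.home() / "Downloads" / "kaggle.json"), assuming that is where the file was saved.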

And run the API command provided:

```python
!kaggle datasets download -d agrigorev/clothing-dataset-full -p ..
```

It’s now been saved as clothing-dataset-full.zip on our local system. The -p .. flag puts it one directory up, which is where the code below looks for it:

```python
from fastcore.xtras import Path

zip_path = Path("../clothing-dataset-full.zip")
zip_path.exists()
```

```
True
```

Let’s go ahead and unzip this with zipfile:

```python
import zipfile

with zipfile.ZipFile(zip_path, "r") as zip_ref:
    zip_ref.extractall("../data")
```

- with zipfile.ZipFile(zip_path, "r") as zip_ref: opens the archive at zip_path for reading. Because it is a context manager, the archive is closed automatically when the block ends.
- zip_ref.extractall("../data"): extracts every file in the archive into a new data directory one level up, creating it if needed.
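To see what the context manager and extractall do without the real download, the sketch below builds a tiny throwaway archive in a temporary directory and extracts it. The archive and file names (demo.zip, images/a.txt) and the zip_roundtrip helper are made up for illustration; namelist() is a handy way to peek inside an archive before extracting it.

```python
import tempfile
import zipfile
from pathlib import Path


def zip_roundtrip(workdir):
    """Build a throwaway archive in `workdir`, then extract it into workdir/data."""
    workdir = Path(workdir)
    demo_zip = workdir / "demo.zip"  # hypothetical archive name

    # Write mode: create an archive containing one nested file
    with zipfile.ZipFile(demo_zip, "w") as zf:
        zf.writestr("images/a.txt", "hello")

    # Read mode, as in the lesson; the archive is closed when the block exits
    with zipfile.ZipFile(demo_zip, "r") as zip_ref:
        names = zip_ref.namelist()
        zip_ref.extractall(workdir / "data")  # creates data/ and data/images/

    extracted = sorted(p.relative_to(workdir / "data").as_posix()
                       for p in (workdir / "data").rglob("*"))
    return names, extracted


with tempfile.TemporaryDirectory() as tmp:
    names, extracted = zip_roundtrip(tmp)
    print(names)      # ['images/a.txt']
    print(extracted)  # ['images', 'images/a.txt']
```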

Let’s look in the folder:

```python
data_path = Path("../data")
data_path.ls()
```

```
(#3) [Path('../data/images_original'),Path('../data/images.csv'),Path('../data/images_compressed')]
```

fastcore monkey-patches a number of improvements onto existing classes, which is why we imported Path from fastcore.xtras rather than from pathlib: importing it from there guarantees the patches are applied. Being able to call ls() on a Path is one of the perks.

We see a file named images.csv, a folder of compressed images, and a folder of original images.
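Path.ls() is a fastcore convenience. If you ever need a similar listing without fastcore, a rough plain-pathlib equivalent is sketched below. ls_plain and its file_exts argument are hypothetical; this version sorts its results for stable output and returns a plain list, whereas fastcore returns its own L list type (hence the (#3) prefix in the output above).

```python
import tempfile
from pathlib import Path


def ls_plain(path, file_exts=None):
    """List a directory's children with plain pathlib, sorted for stable output.

    Hypothetical helper; `file_exts` optionally keeps only matching suffixes, e.g. [".csv"].
    """
    children = sorted(Path(path).iterdir())
    if file_exts is not None:
        children = [p for p in children if p.suffix in file_exts]
    return children


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "images_compressed").mkdir()
    (root / "images.csv").write_text("image,label\n")
    print([p.name for p in ls_plain(root)])          # ['images.csv', 'images_compressed']
    print([p.name for p in ls_plain(root, [".csv"])])  # ['images.csv']
```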

Exploring the dataset

The first thing a good data scientist should do is look at the data, especially when we want to fit it into the fastai framework. Let’s first investigate these compressed vs. original images.

Images

We’ll import the vision library from fastai, which also brings in useful names such as Image (from PIL) and pd (pandas):

```python
from fastai.vision.all import *
```

Next let’s look at the folder’s contents:

```python
(data_path/"images_compressed").ls()[:3]
```

```
(#3) [Path('../data/images_compressed/2cd28b7c-b772-4dfa-9336-d08b14e41444.jpg'),Path('../data/images_compressed/01d1fed7-996d-496b-b3ae-73ab724f29cc.jpg'),Path('../data/images_compressed/10dd86f3-6525-49bc-9c28-e3e9b7a29b64.jpg')]
```

The parentheses around data_path/"images_compressed" matter. In Python, method calls bind more tightly than the / operator, so without them .ls() would be called on the string "images_compressed" instead of on the combined path. Wrapping the expression in parentheses builds the Path first and then calls ls() on the result, much like parentheses in arithmetic (PEMDAS).
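A quick check with plain pathlib (no fastai needed) makes the precedence rule for (data_path/"images_compressed").ls() visible. The .upper() call is just a stand-in for any method; it shows the method running on the string before / joins anything.

```python
from pathlib import PurePosixPath

data_path = PurePosixPath("../data")

# Parentheses first build the path, then call a path attribute on it
with_parens = (data_path / "images_compressed").name

# Without them, .upper() runs on the string "images_compressed" before `/` is applied
without_parens = data_path / "images_compressed".upper()

print(with_parens)     # images_compressed
print(without_parens)  # ../data/IMAGES_COMPRESSED

# The same mistake with .ls() fails, because plain strings have no ls method
try:
    data_path / "images_compressed".ls()
except AttributeError as err:
    error_message = str(err)
print(error_message)  # 'str' object has no attribute 'ls'
```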

As expected, a variety of images. Let’s open one up and look at it:

```python
im_path = (data_path/"images_compressed").ls()[0]
im = Image.open(im_path)
im
```

We can also check its size:

```python
im.shape
```

```
(400, 400)
```

Let’s see how that compares to one of the original images:

```python
im_path = (data_path/"images_original").ls()[0]
im = Image.open(im_path)
im.shape
```

```
(3088, 3088)
```

Way bigger. We’ll train on the compressed images so training is faster.
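One detail worth knowing: PIL itself reports dimensions through Image.size as (width, height). The .shape used above is a property fastai adds to PIL images, and to our understanding it returns (height, width) to match array conventions. For the square images here both orders look identical, so the sketch below uses a made-up 300 by 200 image to make the difference visible, using only PIL and NumPy.

```python
import numpy as np
from PIL import Image

# Hypothetical non-square image: 300 pixels wide, 200 pixels tall
im = Image.new("RGB", (300, 200))

width, height = im.size          # PIL order: (width, height)
hw = (im.size[1], im.size[0])    # (height, width), the order fastai's .shape reports
arr_shape = np.array(im).shape   # NumPy order: (height, width, channels)

print(im.size)    # (300, 200)
print(hw)         # (200, 300)
print(arr_shape)  # (200, 300, 3)
```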

Now that we know how the images are laid out, we need to understand how the data is labeled.

Corrupted Data

Sometimes a dataset contains corrupt files that can’t be opened. The following loop checks for them:

```python
bad_imgs = []
for im in (data_path/"images_compressed").ls():
    try:
        _ = Image.open(im)
    except Exception:
        bad_imgs.append(im)
        im.unlink()
```

- for im in (data_path/"images_compressed").ls(): first we iterate over every image in the images_compressed folder.
- try: _ = Image.open(im): then we attempt to open the image using PIL.Image.open.
- except: bad_imgs.append(im) and im.unlink(): if opening fails, we add the path to the list of bad images and unlink (delete) the file.
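Image.open is lazy: it reads the file header and defers decoding the pixels, so a file with a valid header but truncated image data can slip past the loop above. A stricter sketch forces a full decode with load() and catches the exception types PIL raises for unreadable files. find_bad_images is a hypothetical name, and this version only collects paths (nothing is deleted) so you can inspect them first. fastai also ships a verify_images helper for the same job.

```python
from pathlib import Path

from PIL import Image


def find_bad_images(folder, pattern="*.jpg"):
    """Return paths under `folder` that PIL cannot open *and* fully decode.

    Hypothetical helper: nothing is deleted, so you can inspect the list first.
    """
    bad = []
    for path in sorted(Path(folder).glob(pattern)):
        try:
            with Image.open(path) as im:
                im.load()  # force a full decode, not just a header read
        except (OSError, SyntaxError):  # UnidentifiedImageError is an OSError
            bad.append(path)
    return bad
```

Usage would look like bad = find_bad_images(data_path/"images_compressed"), followed by p.unlink() for each p in bad once you are happy with the list.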

```python
len(bad_imgs)
```

```
6
```

DataFrame

We’ll load the csv file we saw with pandas:

```python
df = pd.read_csv(data_path/'images.csv')
df.head()
```

|   | image | sender_id | label | kids |
|---|---|---|---|---|
| 0 | 4285fab0-751a-4b74-8e9b-43af05deee22 | 124 | Not sure | False |
| 1 | ea7b6656-3f84-4eb3-9099-23e623fc1018 | 148 | T-Shirt | False |
| 2 | 00627a3f-0477-401c-95eb-92642cbe078d | 94 | Not sure | False |
| 3 | ea2ffd4d-9b25-4ca8-9dc2-bd27f1cc59fa | 43 | T-Shirt | False |
| 4 | 3b86d877-2b9e-4c8b-a6a2-1d87513309d0 | 189 | Shoes | False |

- pd.read_csv: we are reading in a CSV file, so we use the pandas (pd) read_csv function and pass in the path.
- df.head(): head returns the first n rows of a pandas DataFrame (5 by default).

Pandas supports reading a wide variety of file formats, including Excel (pd.read_excel)!

We can see the label and image columns, which are the two we need, but something doesn’t look right, does it? That “Not sure” label. Let’s see how many there are:

```python
len(df[df["label"] == "Not sure"]), len(df)
```

```
(228, 5403)
```

- df["label"] == "Not sure": this first part returns a mask of where the condition is met; think of it as an array of True or False values.
- df[...]: this next part indexes into the DataFrame with that mask, keeping only the rows where "label" was "Not sure".

That’s 228 of our 5,403 rows (about 4%) without a usable label, so we should drop them.

To select the inverse of a mask, use the ~ operator:

```python
len(df[~(df["label"] == "Not sure")])
```

```
5175
```

```python
clean_df = df[~(df["label"] == "Not sure")]
clean_df.head()
```

|   | image | sender_id | label | kids |
|---|---|---|---|---|
| 1 | ea7b6656-3f84-4eb3-9099-23e623fc1018 | 148 | T-Shirt | False |
| 3 | ea2ffd4d-9b25-4ca8-9dc2-bd27f1cc59fa | 43 | T-Shirt | False |
| 4 | 3b86d877-2b9e-4c8b-a6a2-1d87513309d0 | 189 | Shoes | False |
| 5 | 5d3a1404-697f-479f-9090-c1ecd0413d27 | 138 | Shorts | False |
| 6 | b0c03127-9dfb-4573-8934-1958396937bf | 138 | Shirt | False |
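Here is the same masking idea on a toy DataFrame, so the intermediate mask is visible. The rows are made up, not taken from images.csv. It also shows that ~(col == value) selects the same rows as col != value, which some find easier to read.

```python
import pandas as pd

# Toy data, not the real images.csv
toy = pd.DataFrame({
    "image": ["a", "b", "c", "d"],
    "label": ["Not sure", "T-Shirt", "Not sure", "Shoes"],
})

mask = toy["label"] == "Not sure"   # boolean Series: True, False, True, False
not_sure = toy[mask]                # rows where the mask is True
kept = toy[~mask]                   # ~ flips every True/False
kept_alt = toy[toy["label"] != "Not sure"]

print(mask.tolist())           # [True, False, True, False]
print(kept["image"].tolist())  # ['b', 'd']
print(kept.equals(kept_alt))   # True
```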

Finally, we need to make sure that none of the bad images we deleted are still referenced in our DataFrame:

```python
for img in bad_imgs:
    clean_df = clean_df[clean_df["image"] != img.stem]
```

img.stem is a pathlib.Path property: the final component of the path without its suffix, i.e. the file name minus ".jpg". That matches the ids stored in the image column.
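The loop above filters the DataFrame once per bad image. An equivalent, vectorized sketch collects all the stems first and drops them in a single pass with Series.isin. The toy DataFrame and file names below are stand-ins for clean_df and bad_imgs, not real data.

```python
from pathlib import Path

import pandas as pd

# Toy stand-ins for clean_df and bad_imgs
clean_df = pd.DataFrame({"image": ["aaa", "bbb", "ccc"], "label": ["Shirt", "Shoes", "Hat"]})
bad_imgs = [Path("../data/images_compressed/bbb.jpg")]

bad_stems = {p.stem for p in bad_imgs}  # {'bbb'}: file name without ".jpg"
clean_df = clean_df[~clean_df["image"].isin(bad_stems)]

print(clean_df["image"].tolist())  # ['aaa', 'ccc']
```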

```python
len(clean_df)
```

```
5170
```

Great! Now let’s see what our classes are:

```python
clean_df["label"].unique(), len(clean_df["label"].unique())
```

```
(array(['T-Shirt', 'Shoes', 'Shorts', 'Shirt', 'Pants', 'Skirt', 'Other',
        'Top', 'Outwear', 'Dress', 'Body', 'Longsleeve', 'Undershirt',
        'Hat', 'Polo', 'Blouse', 'Hoodie', 'Skip', 'Blazer'], dtype=object),
 19)
```

.unique() lists all the unique classes in the label column.

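Much like our Pets classification problem, each image gets exactly one label, here one of 19 different classes. Note that two of them, “Other” and “Skip”, are catch-alls rather than specific clothing items.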
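unique() tells us which classes exist, but not how many images each one has. value_counts() gives per-class counts, which is worth checking before training since very rare classes tend to be harder to learn. The labels below are a toy stand-in; on the real data you would call clean_df["label"].value_counts().

```python
import pandas as pd

# Toy labels, not the real dataset
labels = pd.Series(["T-Shirt", "Shoes", "T-Shirt", "Hat", "T-Shirt", "Shoes"])

print(labels.nunique())          # 3, same as len(labels.unique())
counts = labels.value_counts()   # sorted from most to least frequent
print(counts.to_dict())          # {'T-Shirt': 3, 'Shoes': 2, 'Hat': 1}
shares = labels.value_counts(normalize=True)  # fraction of rows per class
print(shares.round(2).to_dict())
```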

Can we do fastai already?

So let’s go over what we know again:

- Our images are stored in the images_compressed folder
- To get the label for an image, we look it up in images.csv, which we read into a pandas DataFrame
- There are 19 different clothing classes we’re trying to classify

Now that our problem is set up, let’s see how it fits into the DataBlock API:

Building the DataBlock and DataLoaders

First, define the blocks:

```python
blocks = (ImageBlock, CategoryBlock)
```

(ImageBlock, CategoryBlock): this problem has an image going in and a category coming out.

Then how to get our data:

```python
get_x = ColReader("image", pref=(data_path/"images_compressed"), suff=".jpg")
get_y = ColReader("label")
```

- ColReader("label"): we use the ColReader class here, which knows how to read values from a pandas column.
- ColReader(..., pref=..., suff=...): the pref and suff arguments add the folder prefix and the ".jpg" extension to each image id on the fly, rather than us having to modify the DataFrame.
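Roughly speaking, ColReader with pref and suff turns each row’s image id into a full file path by gluing the prefix, the cell value, and the suffix together. The read_col function below is a hypothetical, simplified imitation for intuition, not fastai’s implementation; it assumes a Path prefix gets a path separator appended, which matches our reading of fastai’s source. The example row reuses an id from the table above.

```python
import os
from pathlib import Path

import pandas as pd


def read_col(row, col, pref="", suff=""):
    """Hypothetical stand-in for ColReader(col, pref=..., suff=...) on one row."""
    if isinstance(pref, Path):
        pref = str(pref) + os.path.sep  # a Path prefix becomes "folder/"
    return f"{pref}{row[col]}{suff}"


row = pd.Series({"image": "ea7b6656-3f84-4eb3-9099-23e623fc1018", "label": "T-Shirt"})
print(read_col(row, "image", pref=Path("../data/images_compressed"), suff=".jpg"))
# ../data/images_compressed/ea7b6656-3f84-4eb3-9099-23e623fc1018.jpg  (on POSIX)
print(read_col(row, "label"))  # T-Shirt
```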

Next our Transforms:

```python
item_tfms = [Resize(224)]
batch_tfms = [*aug_transforms(), Normalize.from_stats(*imagenet_stats)]
```

And finally build our DataBlock:

```python
dblock = DataBlock(
    blocks=blocks,
    get_x=get_x,
    get_y=get_y,
    item_tfms=item_tfms,
    batch_tfms=batch_tfms
)
```

All that’s left is to turn it into DataLoaders:

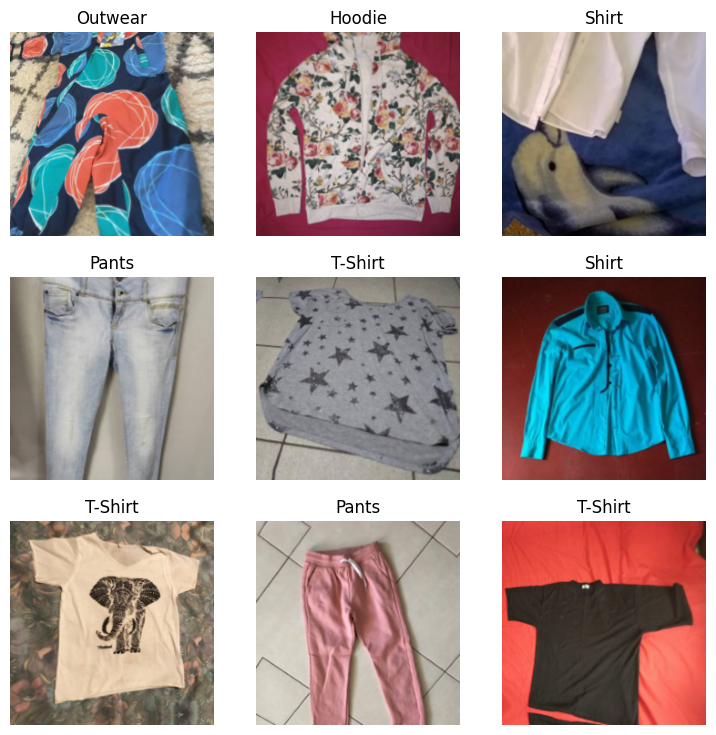

dls = dblock.dataloaders(clean_df)dls.show_batch()

Great! Our data looks good to train on.
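The last batch transform, Normalize.from_stats(*imagenet_stats), standardizes each color channel as (x - mean) / std, so our inputs match the statistics of the ImageNet data the pretrained resnet34 was trained on. The sketch below does that arithmetic in plain NumPy on a random dummy batch. The mean and std values are the commonly used ImageNet statistics (which, as far as we know, are the numbers fastai’s imagenet_stats holds), and the batch is assumed to be already scaled to [0, 1].

```python
import numpy as np

mean = np.array([0.485, 0.456, 0.406])  # per-channel RGB means
std = np.array([0.229, 0.224, 0.225])   # per-channel RGB standard deviations

# Dummy batch: 2 images, 3 channels, 4x4 pixels, already scaled to [0, 1]
rng = np.random.default_rng(0)
x = rng.random((2, 3, 4, 4))

# Reshape stats to (1, 3, 1, 1) so they broadcast over batch, height and width
x_norm = (x - mean[None, :, None, None]) / std[None, :, None, None]

# Undoing the transform recovers the original pixels
x_back = x_norm * std[None, :, None, None] + mean[None, :, None, None]
print(np.allclose(x, x_back))  # True

# A pixel equal to the channel mean maps to exactly 0
print((np.array([0.485, 0.456, 0.406]) - mean) / std)  # [0. 0. 0.]
```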

Creating a Learner and fine-tuning

Now let’s create the vision_learner and fine-tune:

```python
learn = vision_learner(dls, resnet34, metrics=accuracy)
learn.fine_tune(5)
```

fine_tune first trains only the new head for one epoch with the pretrained body frozen (first table), then unfreezes the whole model and trains for the 5 epochs we asked for (second table).

| epoch | train_loss | valid_loss | accuracy | time |
|---|---|---|---|---|
| 0 | 2.414496 | 1.025276 | 0.713733 | 00:20 |

| epoch | train_loss | valid_loss | accuracy | time |
|---|---|---|---|---|
| 0 | 1.154287 | 0.706250 | 0.796905 | 00:26 |
| 1 | 0.829337 | 0.676548 | 0.812379 | 00:25 |
| 2 | 0.595276 | 0.636301 | 0.825919 | 00:25 |
| 3 | 0.399195 | 0.584930 | 0.841393 | 00:25 |
| 4 | 0.297942 | 0.586610 | 0.847195 | 00:25 |
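Where did the validation set come from? We never passed a splitter to DataBlock, so it falls back to fastai’s default RandomSplitter, which holds out a random 20% of the rows (1,034 of our 5,170). The accuracy column is measured on that held-out set. The sketch below imitates the idea with NumPy; random_split is a hypothetical name and not fastai’s code. Passing a fixed seed (RandomSplitter accepts one too) makes the split reproducible between runs.

```python
import numpy as np


def random_split(n_items, valid_pct=0.2, seed=None):
    """Shuffle indices and hold out the first `valid_pct` fraction for validation.

    Hypothetical imitation of a random train/valid split.
    """
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_items)
    cut = int(valid_pct * n_items)
    return idx[cut:], idx[:cut]  # (train indices, valid indices)


train_idx, valid_idx = random_split(5170, seed=42)
print(len(train_idx), len(valid_idx))  # 4136 1034
```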

And we’re done!

Next week we’ll look at the low-level API with MNIST, build a model from scratch, and start to peel away some of the layers of fastai, thinking of it more as an extension to PyTorch.